Shared Task

Building Compact Sinhala & Tamil LLMs

Date: 5th August 2025

Location: IIT City Office, Colombo 03

Time: 9:00 AM onwards

Are you ready to build something small that makes a big difference? As part of the “Small Models, Big Impact” Research Conclave, this shared task challenges university students and industry innovators to develop efficient, high-performing language models for Sinhala and Tamil that are light enough to run on devices and at the edge. Using open-source LLMs, participants will fine-tune or continue pre-training these models to better serve our local languages—making powerful AI more accessible, scalable, and locally relevant. Whether you’re passionate about language, excited by edge-AI, or looking to make your mark on the future of Sinhala and Tamil tech, this is your chance to learn, build, compete, and make a lasting impact.

1. Task Overview & Objectives

- Goal: Foster development of compact, high-quality LLMs for Sinhala and Tamil by continual pre-training or fine-tuning open-source models with ≤ 8 billion parameters.

- Impact: Empower local NLP research and applications—chatbots, translation, sentiment analysis, educational tools—while lowering computational and storage barriers.

- Who Should Participate:

  - Students & Academic Teams: Showcase research on model adaptation, data augmentation, and multilingual or multitask training.

  - Industry & Startups: Demonstrate practical performance in real-world pipelines; optimise inference speed and resource usage.

2. Allowed Base Models

Participants must choose one of the following (or any other fully open-source LLM ≤ 8 B params):

| Model Name | Parameters | Notes |
| --- | --- | --- |
| Llama 3 | 1B, 3B, 8B | Meta’s Llama series, particularly the smaller versions, is designed for efficiency and multilingual text generation. While the larger Llama models are more widely known, the 1B and 3B models offer a compact solution. Meta has also shown interest in addressing the linguistic diversity gap, which includes support for languages like Sinhala and Tamil. |
| Gemma | 2B, 4B | Developed by Google DeepMind, Gemma models are known for being lightweight yet powerful, with strong multilingual capabilities. Google has a strong focus on linguistic diversity, and Gemma’s architecture makes it a good candidate for adapting to less-resourced languages. |
| Qwen-2 | 0.5B, 1.5B, 7B | This family of models from Alibaba is designed for efficiency and versatility. Their strong multilingual pretraining makes them good candidates for adaptation to Sinhala and Tamil through fine-tuning. |
| Microsoft Phi-3-Mini | 3.8B | This model from Microsoft is highlighted for its strong reasoning and code generation capabilities within a compact size. While its primary focus isn’t explicitly on a wide range of South Asian languages, its efficient design and good general language understanding could make it a suitable base for fine-tuning with Sinhala and Tamil data. |
| Or … any other open-source checkpoint ≤ 8 B params | | |

Note: Proprietary or closed-license models (e.g., GPT-3 series, Claude) are not allowed.

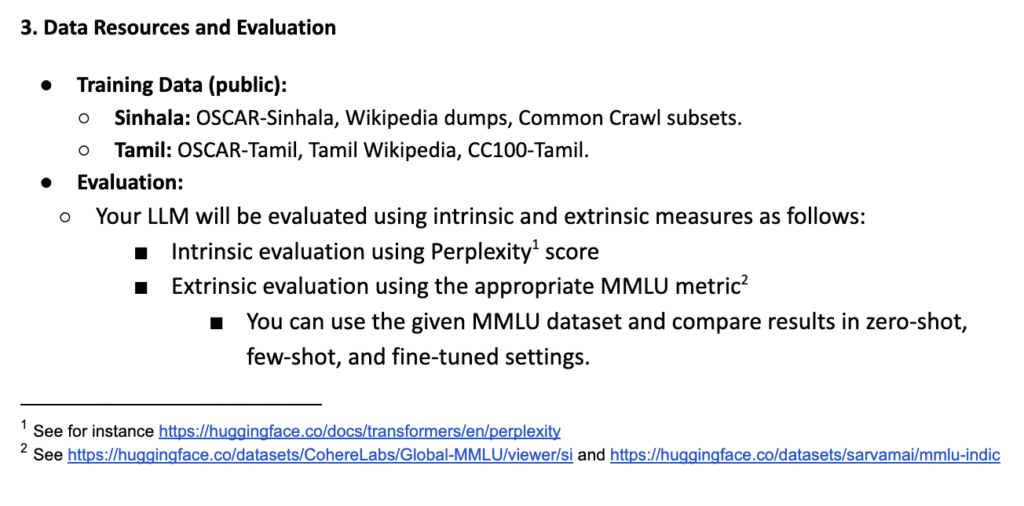

3. Data Resources and Evaluation

Perplexity: https://huggingface.co/docs/transformers/en/perplexity
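As a rough illustration of the metric linked above, perplexity is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have per-token natural-log probabilities from your model; the function name `perplexity` and the example values are hypothetical and not part of the official evaluation.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities.

    PPL = exp(-(1/N) * sum(log p_i)), where N is the number of tokens.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# Hypothetical example: a model that assigns probability 0.25 to each of
# four tokens is as uncertain as a uniform choice among four options,
# so the result is close to 4.
example_log_probs = [math.log(0.25)] * 4
print(perplexity(example_log_probs))
```

Lower values mean the model finds the text less surprising. Because the average is taken per token, scores from models with different tokenizers are not directly comparable.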

MMLU Datasets: https://huggingface.co/datasets/CohereLabs/Global-MMLU/viewer/si and https://huggingface.co/datasets/sarvamai/mmlu-indic
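The scoring protocol for the multiple-choice benchmarks is not specified here. One common approach, shown below purely as an assumption, is to score each answer option (for example, by the model's log-likelihood of that option), pick the highest-scoring one, and report accuracy. The names `pick_answer` and `accuracy` and all numbers are hypothetical.

```python
from typing import Sequence


def pick_answer(option_scores: Sequence[float]) -> int:
    """Return the index of the highest-scoring answer option."""
    return max(range(len(option_scores)), key=lambda i: option_scores[i])


def accuracy(all_scores: Sequence[Sequence[float]], gold: Sequence[int]) -> float:
    """Fraction of questions where the top-scoring option matches the gold index."""
    if len(all_scores) != len(gold) or not gold:
        raise ValueError("need one non-empty score list per gold answer")
    correct = sum(pick_answer(s) == g for s, g in zip(all_scores, gold))
    return correct / len(gold)


# Hypothetical per-option log-likelihoods for three four-option questions.
scores = [
    [-4.1, -1.2, -3.3, -5.0],  # model prefers option 1
    [-2.0, -2.5, -0.7, -3.1],  # model prefers option 2
    [-0.9, -1.5, -2.2, -2.8],  # model prefers option 0
]
gold = [1, 2, 3]  # hypothetical gold answer indices
print(accuracy(scores, gold))  # two of three predictions match
```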

4. Submission Requirements

- Model: HuggingFace-format upload.

- Scripts and Notebooks: Should be uploaded to a GitHub or HuggingFace repository.

- Technical Report (2-5 pages):

  - Training details: data sources, training mechanism, epochs, batch size, learning rates.

  - Resource usage: GPU time, list of hardware resources.

  - Model evaluation.

  - Analysis of strengths/limitations.
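One way to keep the training and resource details requested for the report in a single place is to record them per run and export them as JSON. This is only a sketch: the `RunRecord` class, its field names, and the example values are hypothetical, and GPU time is assumed here to mean number of GPUs multiplied by wall-clock hours.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class RunRecord:
    """Hypothetical record of one training run for the technical report."""
    data_sources: List[str]
    training_mechanism: str  # e.g. "continual pre-training" or "fine-tuning"
    epochs: int
    batch_size: int
    learning_rate: float
    hardware: List[str]
    num_gpus: int
    wall_clock_hours: float

    @property
    def gpu_hours(self) -> float:
        # Assumption: GPU time = number of GPUs x wall-clock hours.
        return self.num_gpus * self.wall_clock_hours

    def to_json(self) -> str:
        payload = asdict(self)
        payload["gpu_hours"] = self.gpu_hours
        # ensure_ascii=False keeps Sinhala/Tamil text readable in the output.
        return json.dumps(payload, indent=2, ensure_ascii=False)


# Hypothetical example values, not taken from any real run.
record = RunRecord(
    data_sources=["example-open-corpus"],
    training_mechanism="fine-tuning",
    epochs=3,
    batch_size=16,
    learning_rate=2e-5,
    hardware=["example GPU model"],
    num_gpus=2,
    wall_clock_hours=6.5,
)
print(record.to_json())
```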

5. Timeline

| Milestone | Date |
| --- | --- |
| Task announcement date | 25th June 2025 |
| Deadline | 7th July 2025 |
| Introductory Meeting | 9th July 2025 |
| Progress Meeting | 21st July 2025 |
| Progress Meeting 2 | 30th July 2025 |
| Event Date | 5th August 2025 |

6. Prizes & Incentives

- Best Teams (for Sinhala & Tamil): Awards and winning certificates.

- All Participants: Certificate of participation.

7. Rules & Fairness

- Parameter Limit: Strict upper bound of 8 B parameters (model + adapter weights).

- Data Usage: Only public or open-license data; no private data and no data scraped from behind a login.

- Reproducibility: All code, data-prep scripts, and logs must be publicly accessible by the submission deadline.
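To sanity-check the parameter limit above before submitting, the base and adapter parameter counts can be summed and compared against the 8 B bound. The sketch below assumes a LoRA-style adapter, where adapting a `d_in × d_out` weight matrix at rank `r` adds `r × (d_in + d_out)` parameters. The helper names and every number in the example are hypothetical, and how the organisers count parameters in practice may differ.

```python
PARAM_LIMIT = 8_000_000_000  # 8 B upper bound stated in the rules


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Extra parameters from one LoRA pair: A is (d_in x rank), B is (rank x d_out)."""
    return rank * (d_in + d_out)


def within_budget(base_params: int, adapter_params: int,
                  limit: int = PARAM_LIMIT) -> bool:
    """True if model + adapter weights stay at or below the limit."""
    return base_params + adapter_params <= limit


# Hypothetical configuration: a 7.6 B base model with rank-16 adapters on
# four 4096 x 4096 projection matrices in each of 32 layers.
base = 7_600_000_000
adapter = 32 * 4 * lora_params(4096, 4096, 16)

print(f"adapter parameters: {adapter:,}")
print(f"total parameters:   {base + adapter:,}")
print("within limit:", within_budget(base, adapter))
```

For a real checkpoint, the base count should come from the loaded model itself rather than from the model's name, since a nominal size such as "8B" is rounded and the exact figure may fall on either side of the limit.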

8. How to Register & Contact

- Registration Form: https://forms.gle/edzfpopVvKkkF6cH8

- Contact: iciit@iit.ac.lk

- Phone: 076 981 1289